Protect AI Infrastructure

Protect your enterprise from LLM hijacking

Threat actors are using AWS credentials to do a lot more than crypto-mining or mail abuse. They're hijacking corporate GenAI infrastructure to host their own LLM applications.

The New Frontier of Resource Hijacking

Attackers are racking up large compute bills by running their own LLM instances on victim infrastructure. A compromise of your AI infrastructure is often hard to detect, and without prompt logging it is hard to monitor for malicious behavior.

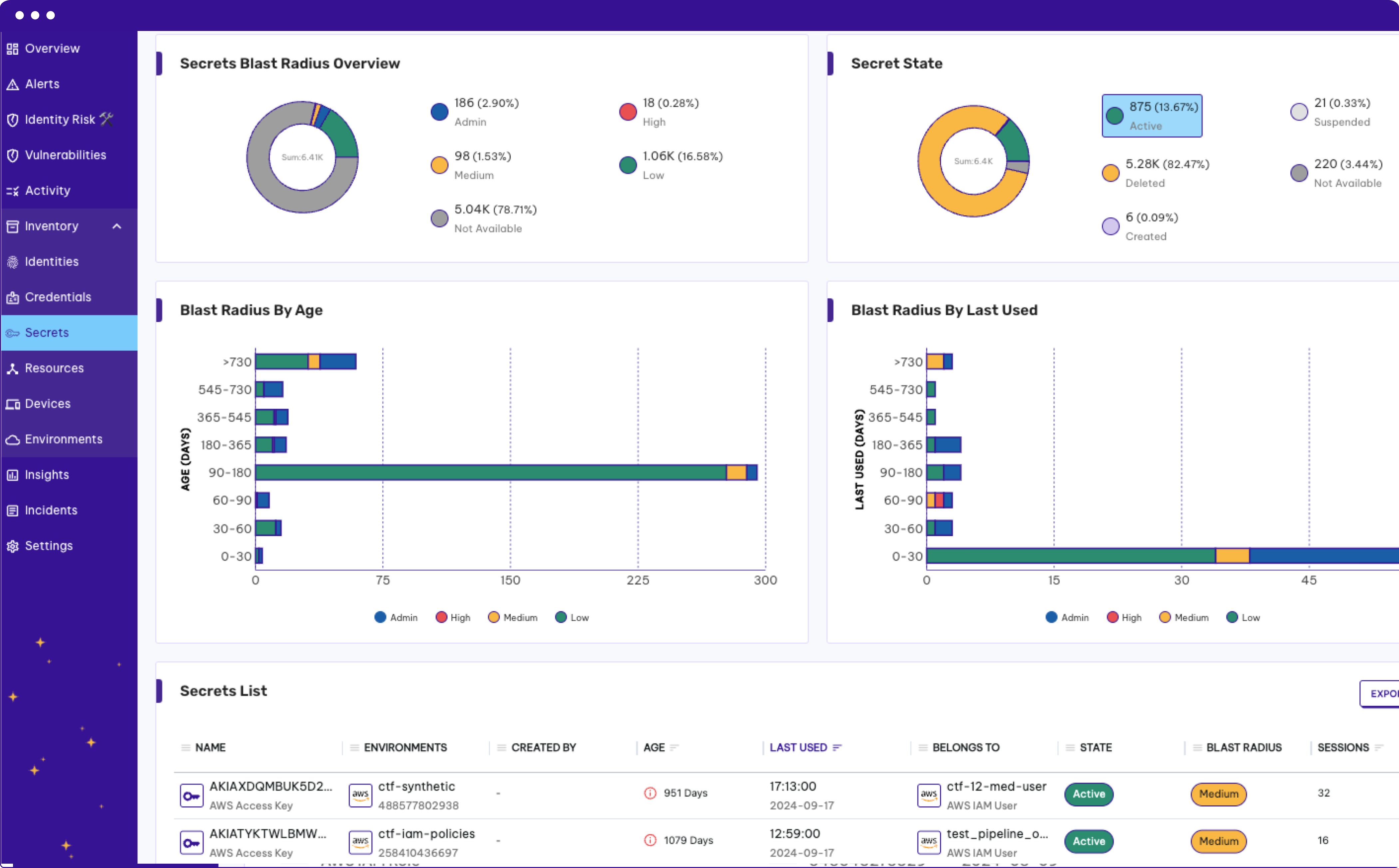

Detect LLM Hijacking

Permiso monitors the credentials used to reach your LLM instances and how those instances are accessed. We also monitor your prompt logs for suspicious and malicious activity, so LLM hijacking is detected quickly.
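To make the idea of credential and access monitoring concrete, here is a minimal sketch. It is not Permiso's detection logic. It assumes activity records shaped like AWS CloudTrail events (`eventSource`, `eventName`, `userIdentity.arn`) and assumes the LLM service is Amazon Bedrock, which this page does not state. The allowlist, account ID, ARNs, and function name are hypothetical.

```python
from collections import Counter

# Hypothetical allowlist: principals that are expected to invoke models.
EXPECTED_PRINCIPALS = {"arn:aws:iam::111122223333:role/genai-app"}

# Assumption: the LLM service is Amazon Bedrock and these are the
# invocation event names of interest.
LLM_EVENT_SOURCE = "bedrock.amazonaws.com"
LLM_EVENT_NAMES = {"InvokeModel", "InvokeModelWithResponseStream"}


def flag_unexpected_llm_calls(events, expected=EXPECTED_PRINCIPALS):
    """Count model invocations made by principals not on the allowlist."""
    counts = Counter()
    for event in events:
        if event.get("eventSource") != LLM_EVENT_SOURCE:
            continue  # not an LLM service event
        if event.get("eventName") not in LLM_EVENT_NAMES:
            continue  # not a model invocation
        arn = event.get("userIdentity", {}).get("arn", "unknown")
        if arn not in expected:
            counts[arn] += 1
    return dict(counts)


# Illustrative records only; real CloudTrail events carry many more fields.
sample_events = [
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:role/genai-app"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/old-ci-key"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/old-ci-key"}},
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/old-ci-key"}},
]

suspicious = flag_unexpected_llm_calls(sample_events)
print(suspicious)
```

A static allowlist is the simplest possible baseline. It only shows who is invoking models, not what they are asking for, which is why prompt logs matter as a second signal.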

Why Traditional Tools Fail Against Modern Attacks

Security teams have tried to solve the cloud threat detection problem with a combination of existing technologies such as SIEM, CNAPP, and CWP. These tools weren't built for modern attacks, in which attackers hop between cloud environments and mask themselves with valid credentials.

Traditional tools have a siloed focus (IaaS only), are event-driven (noisy and high-volume), and lack the identity attribution context needed to speed up investigations.

Want to see more?
